Ian Cheng: BOB

Interaction System for AI

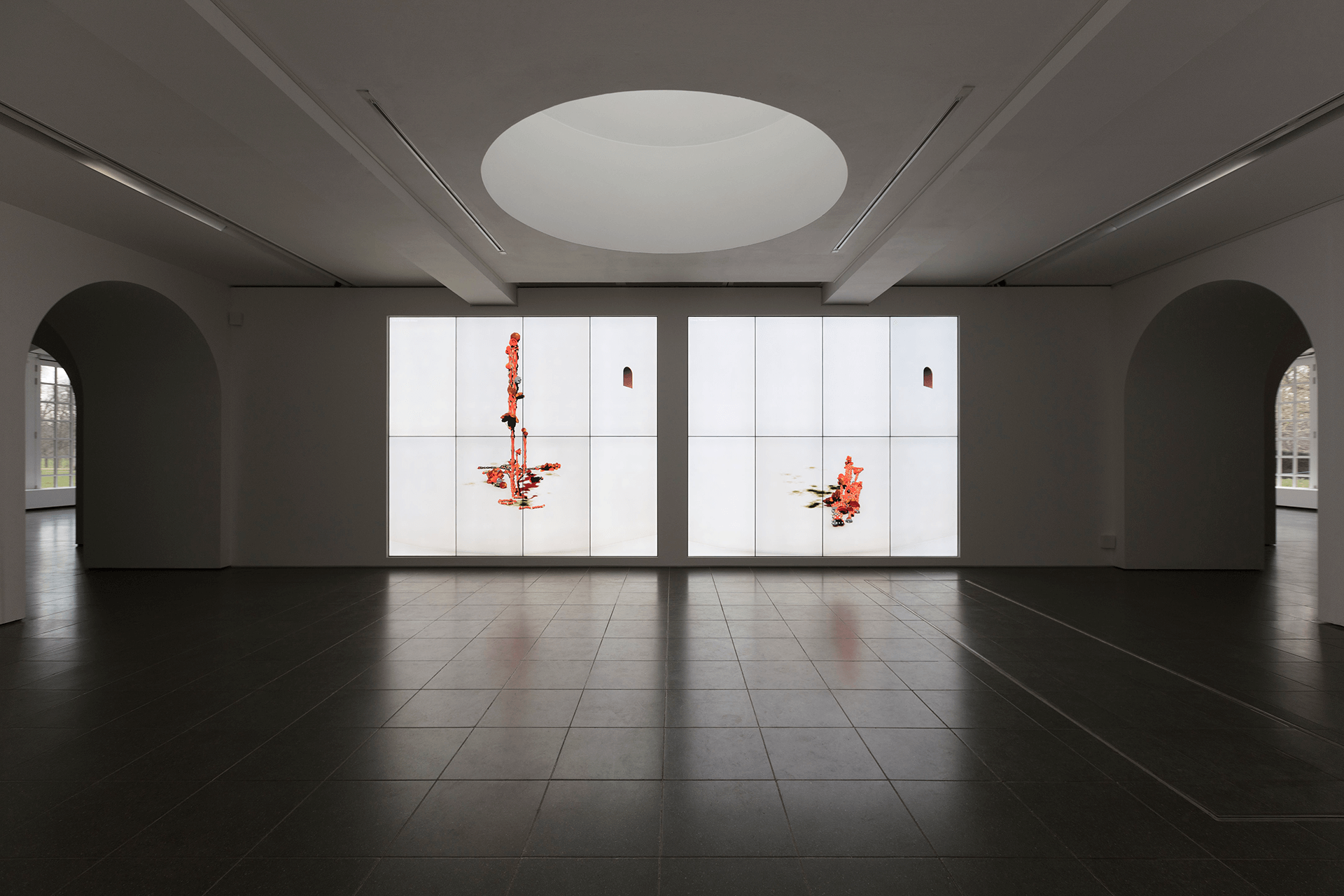

Ian Cheng - BOB - 2018

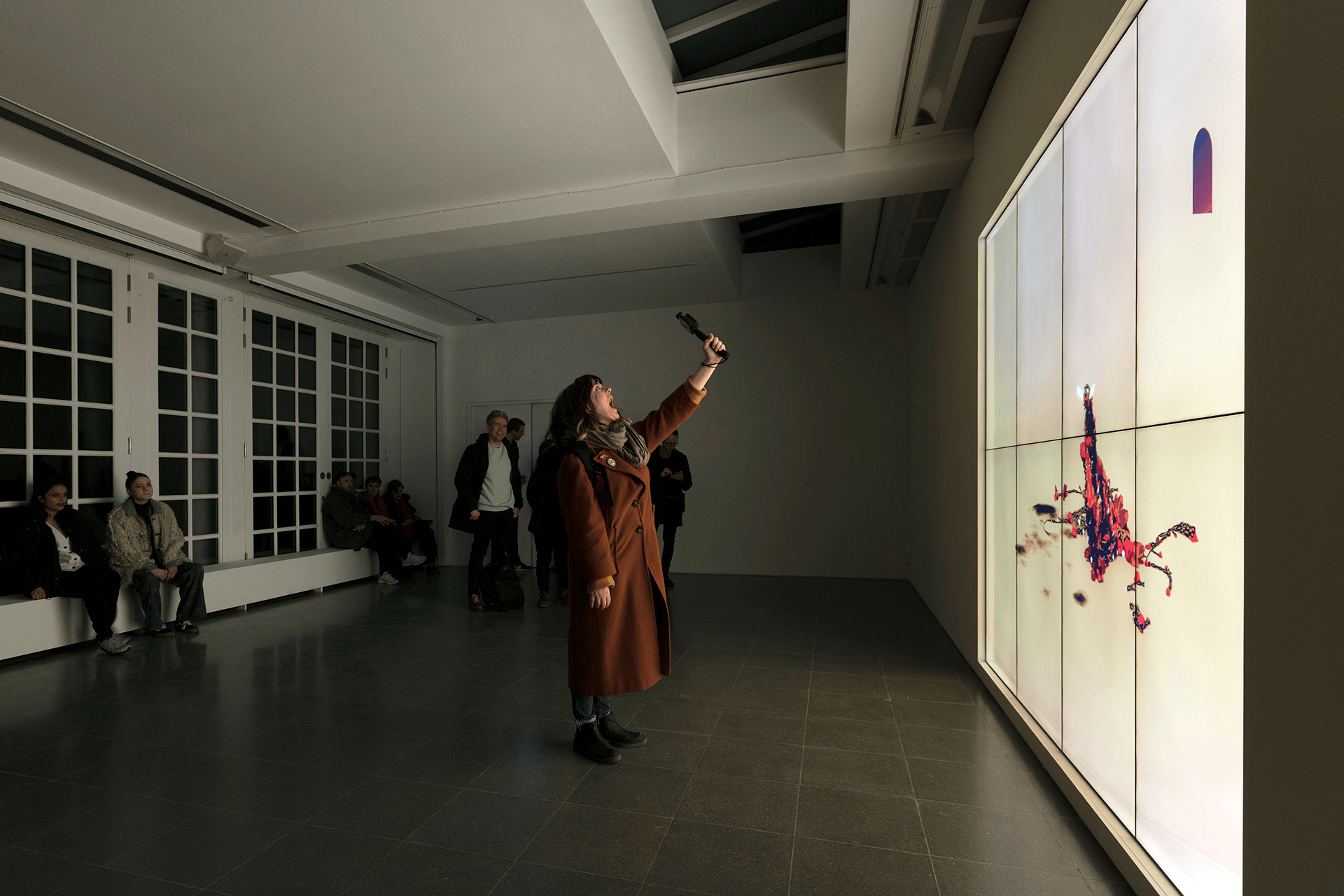

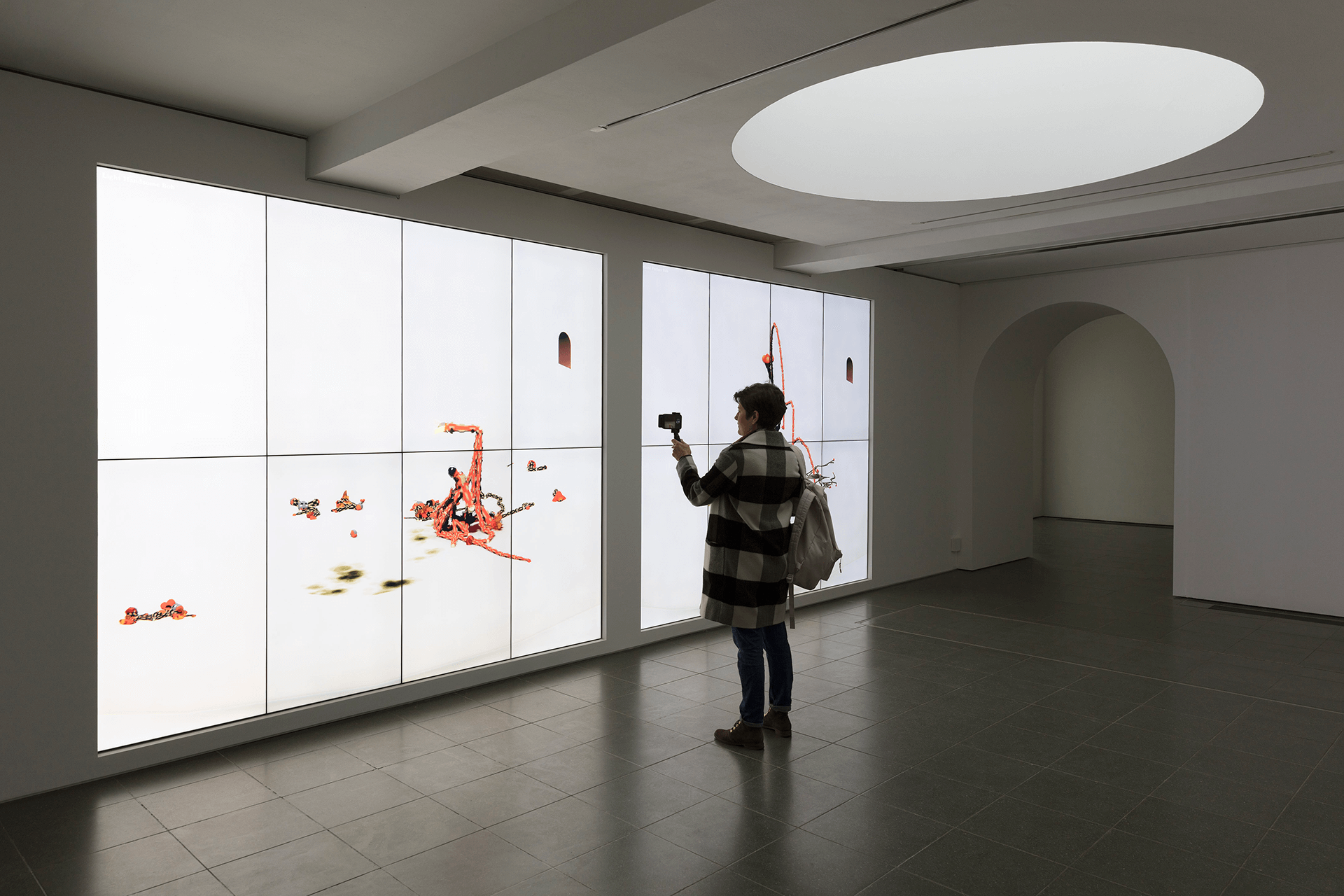

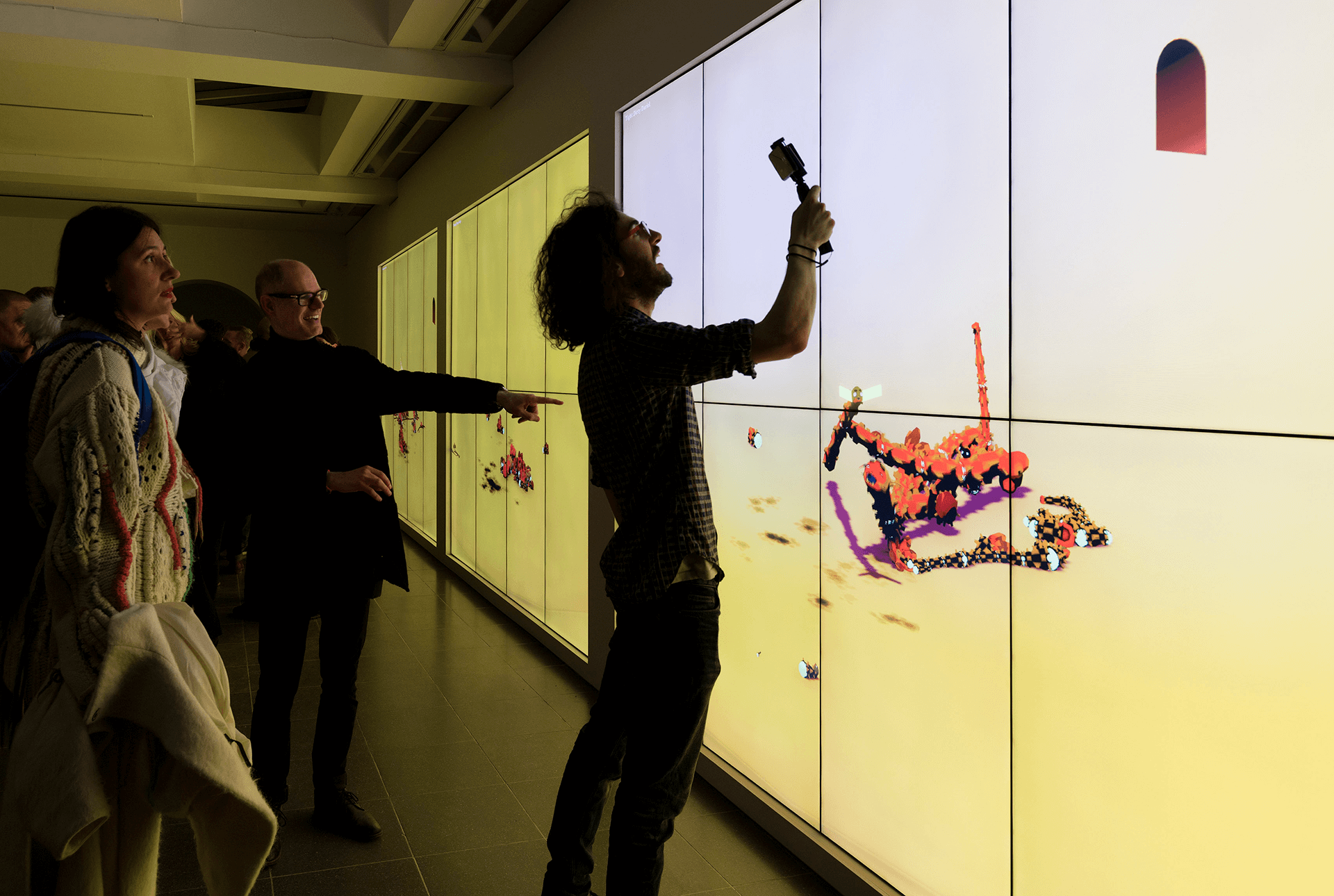

BOB stands for Bag of Beliefs. BOB is an artificial lifeform who enjoys the thrill of making its own path and the growing pains of developing a generative attitude toward living. BOB's experiences solidify into personality traits and patterns of growth. BOB's life story is persistent, irreversible, ageing, and consequential. Yet, when conditions are ripe, BOB may engage in activities powerful enough to change its mind and reshape its body.

Luxloop was approached by artist Ian Cheng to develop the tracking and interaction systems that would enable visitors to interact with, and affect, BOB's AI.

Cheng’s work explores mutation, the history of human consciousness and our capacity as a species to relate to change. Drawing on principles of video game design, improvisation and cognitive science, Cheng develops live simulations – virtual ecosystems of infinite duration, populated with agents who are programmed with behavioral drives but left to self-evolve without authorial intent. Cheng was interested in exploring ways in which these simulations could become interactive and open to the influence of the viewers themselves.

Creating Presence in BOB's World

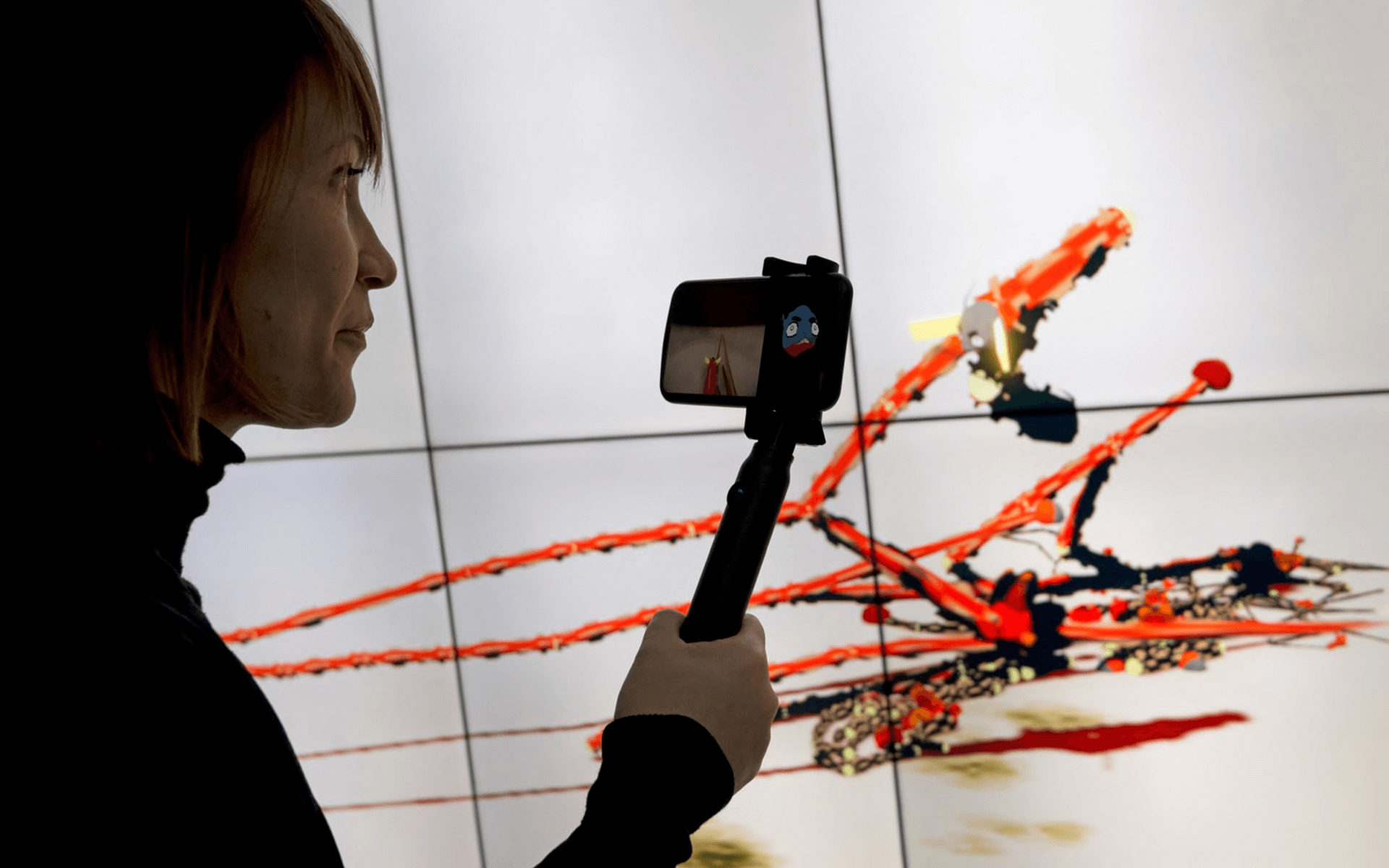

To bridge BOB's virtual space and the viewer's physical one, Luxloop implemented a custom tracking system that allowed visitors to move through the gallery and see their movements reflected in the virtual space. By "possessing" one of BOB's heads, viewers could take control and form a physical connection with BOB, one that would leave a permanent imprint on BOB's memories and affect future interactions.
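How tracked positions reach BOB's world is not described here. As a minimal sketch, assuming the tracker reports each visitor's 2D floor position in meters, one option is a calibrated similarity transform (scale, rotation, offset) into the simulation's coordinates, with light exponential smoothing to damp jitter. The `Calibration` and `SmoothedPosition` names and all parameter values are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Calibration:
    """Hypothetical 2D similarity transform: gallery floor (meters) -> virtual units."""
    scale: float         # virtual units per meter
    rotation_rad: float  # rotation of the gallery axes relative to the virtual axes
    offset_x: float      # virtual-space position of the gallery origin
    offset_y: float

    def to_virtual(self, x_m: float, y_m: float) -> tuple[float, float]:
        # Rotate, then scale, then translate into the simulation's frame.
        c, s = math.cos(self.rotation_rad), math.sin(self.rotation_rad)
        vx = self.scale * (c * x_m - s * y_m) + self.offset_x
        vy = self.scale * (s * x_m + c * y_m) + self.offset_y
        return vx, vy


class SmoothedPosition:
    """Exponential moving average that damps jitter in raw tracker readings."""

    def __init__(self, alpha: float = 0.3):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value: tuple[float, float] | None = None

    def update(self, x: float, y: float) -> tuple[float, float]:
        if self.value is None:
            # First reading: nothing to smooth against yet.
            self.value = (x, y)
        else:
            px, py = self.value
            self.value = (px + self.alpha * (x - px), py + self.alpha * (y - py))
        return self.value
```

Smoothing before transforming keeps the filter in physical units, so `alpha` can be tuned independently of the virtual world's scale; a larger `alpha` follows motion more tightly at the cost of more jitter.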

This sense of physical presence is reinforced by a point-of-view feed from inside BOB's enclosure, streamed in real time to an iPhone X. Luxloop developed a custom solution for compressing and streaming the virtual camera feed from BOB's world to the connected smartphone, as well as for sending information from the phone back to BOB.
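The page doesn't specify the transport, codec, or message format Luxloop used. As an illustrative sketch, assuming a single TCP connection per phone, one common pattern is to prefix each message with a type byte and a length so that compressed video frames (server to phone) and control messages (phone to server) can share one link. `MSG_VIDEO_FRAME`, `MSG_CONTROL`, and `FrameDecoder` are hypothetical names, and frame compression itself (for example JPEG or H.264 encoding) is assumed to happen before `encode` is called.

```python
from __future__ import annotations

import json
import struct

# Hypothetical message types for a phone <-> BOB link.
MSG_VIDEO_FRAME = 1  # server -> phone: one already-compressed frame of BOB's virtual camera
MSG_CONTROL = 2      # phone -> server: JSON-encoded input sent back to BOB

# Header: 1-byte message type + 4-byte big-endian payload length (5 bytes, no padding).
HEADER = struct.Struct("!BI")


def encode(msg_type: int, payload: bytes) -> bytes:
    """Prefix a payload with its type and length so the receiver can split the stream."""
    return HEADER.pack(msg_type, len(payload)) + payload


def encode_control(data: dict) -> bytes:
    """Serialize a control message as UTF-8 JSON inside a framed message."""
    return encode(MSG_CONTROL, json.dumps(data).encode("utf-8"))


class FrameDecoder:
    """Reassembles whole messages from arbitrarily chunked socket reads."""

    def __init__(self) -> None:
        self._buf = bytearray()

    def feed(self, chunk: bytes) -> list[tuple[int, bytes]]:
        self._buf.extend(chunk)
        messages = []
        while len(self._buf) >= HEADER.size:
            msg_type, length = HEADER.unpack_from(self._buf, 0)
            end = HEADER.size + length
            if len(self._buf) < end:
                break  # payload not fully received yet; wait for more bytes
            messages.append((msg_type, bytes(self._buf[HEADER.size:end])))
            del self._buf[:end]
        return messages
```

The decoder buffers partial reads because TCP delivers a byte stream rather than discrete messages: a single read may contain half a frame or several messages at once.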

Understanding Emotional Cues

In addition to responding to physical movement, BOB can perceive the viewer's outward emotional state, which in turn influences whether BOB's memory of the interaction is positive or negative and may make BOB agitated or calm. Using the iPhone X's depth camera, our custom app processes a detailed model of each viewer's face in real time to infer their emotional state, and maps it onto an Animoji-style avatar representing the way BOB sees each user.
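ARKit's face tracking exposes per-frame blend shape coefficients on `ARFaceAnchor` (values from 0.0 to 1.0 for locations such as `mouthSmileLeft` or `browDownLeft`), which is one plausible input for this kind of inference. The source doesn't describe the actual emotion model, so the following is a deliberately simple, hypothetical heuristic, with a plain Python dictionary standing in for the ARKit data: it scores each frame's valence, then averages over an interaction to tag the memory as positive, negative, or neutral. The weights and the `0.2` threshold are arbitrary assumptions.

```python
from __future__ import annotations

# Keys mirror ARKit blend shape location names; values are coefficients in [0.0, 1.0].
# This dictionary format is a stand-in, not ARKit's actual data structure.


def _avg(shapes: dict[str, float], *keys: str) -> float:
    # Missing coefficients are treated as 0.0 (a neutral face).
    return sum(shapes.get(k, 0.0) for k in keys) / len(keys)


def valence(shapes: dict[str, float]) -> float:
    """Hypothetical heuristic: smiles push positive; frowns and lowered brows push negative."""
    smile = _avg(shapes, "mouthSmileLeft", "mouthSmileRight")
    frown = _avg(shapes, "mouthFrownLeft", "mouthFrownRight")
    brow_down = _avg(shapes, "browDownLeft", "browDownRight")
    score = smile - frown - 0.5 * brow_down
    return max(-1.0, min(1.0, score))  # clamp to [-1, 1]


def classify(score: float, threshold: float = 0.2) -> str:
    """Bucket a valence score into a memory tag."""
    if score >= threshold:
        return "positive"
    if score <= -threshold:
        return "negative"
    return "neutral"


def summarize_interaction(frames: list[dict[str, float]]) -> str:
    """Average per-frame valence over a whole interaction to tag the resulting memory."""
    if not frames:
        return "neutral"
    return classify(sum(valence(f) for f in frames) / len(frames))
```

Averaging across the whole interaction keeps a single fleeting grimace from turning the memory negative; a trained classifier could replace the hand-picked weights without changing this overall shape.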

Roles

- Spatial Tracking System

- Interaction Development

- Custom iOS Application

- Network Infrastructure

Tech

- iOS App

- Ultra-Wideband (UWB) Location Tracking

- Real-time Facial Emotion/Expression Parsing
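UWB location systems generally estimate a tag's position from its measured distances to several fixed anchors. The source doesn't name the UWB hardware or say how positions were solved (many vendor systems compute this on the hardware itself), so this is a generic sketch rather than Luxloop's method: 2D least-squares trilateration that linearizes the range equations by subtracting the first anchor's equation, then solves the resulting 2x2 normal equations. It ignores height and the noise filtering a real deployment would need.

```python
from __future__ import annotations


def trilaterate(anchors: list[tuple[float, float]], ranges: list[float]) -> tuple[float, float]:
    """Least-squares 2D position from measured distances to >= 3 fixed anchors.

    Each range gives (x - xi)^2 + (y - yi)^2 = di^2. Subtracting the equation for
    anchor 0 removes the quadratic terms, leaving one linear row per other anchor:
        2(xi - x0) x + 2(yi - y0) y = d0^2 - di^2 + xi^2 - x0^2 + yi^2 - y0^2
    """
    if len(anchors) != len(ranges) or len(anchors) < 3:
        raise ValueError("need one range per anchor and at least 3 anchors")
    (x0, y0), d0 = anchors[0], ranges[0]
    # Accumulate the 2x2 normal equations (A^T A) p = A^T r.
    s_aa = s_ab = s_bb = s_ar = s_br = 0.0
    for (xi, yi), di in zip(anchors[1:], ranges[1:]):
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        r = d0**2 - di**2 + xi**2 - x0**2 + yi**2 - y0**2
        s_aa += a * a
        s_ab += a * b
        s_bb += b * b
        s_ar += a * r
        s_br += b * r
    det = s_aa * s_bb - s_ab * s_ab
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")
    # Cramer's rule on the 2x2 system.
    x = (s_ar * s_bb - s_ab * s_br) / det
    y = (s_aa * s_br - s_ab * s_ar) / det
    return x, y
```

With more than three anchors the system is overdetermined, and the least-squares solution averages out some ranging error instead of trusting any single measurement.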